Portfolio

Check out some of the projects I have worked on.

Computer Vision Applications

Computer Vision OCR and Face Detection

– Most technical information involving this project has been omitted to protect confidentiality –

Computer vision has many applications in logistics and on-demand ordering services. It can be used to read photos of driver's licenses and extract their information, or to verify from a photo that a delivery drop-off was successful.

This project used OCR to extract driver information and automate the driver registration process. Given a single photo of a driver's license, what would be the best algorithm for doing so?

Starting with the basics, we first need to figure out whether there is a driver's license in the photo at all! This requires basic object detection to define the bounding box of the license within the image and make sure we have the correct orientation.

Popular object detection algorithms include YOLO, R-CNN and RetinaNet.

Since we know the dimensions of a driver's license, we can use its aspect ratio as a tell: if the object detection algorithm finds a rectangle in the image, is that rectangle a street sign or a driver's license? At this stage we don't need to be certain; we just need to make sure the card is at the correct orientation and size in the photo.
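A minimal sketch of that aspect-ratio check, assuming the detector returns a bounding box width and height in pixels and that the card follows the ISO/IEC 7810 ID-1 size (85.60 x 53.98 mm) used by North American driver's licenses. The helper name `check_card_box` and the 10% tolerance are hypothetical choices for illustration.

```python
# ISO/IEC 7810 ID-1 card size in millimetres (driver's license / credit card size)
ID1_WIDTH_MM = 85.60
ID1_HEIGHT_MM = 53.98
ID1_RATIO = ID1_WIDTH_MM / ID1_HEIGHT_MM  # roughly 1.586


def check_card_box(width: float, height: float, tolerance: float = 0.10) -> dict:
    """Hypothetical helper: does a detected box have ID-1 proportions?

    Also reports whether the crop must be rotated 90 degrees to put the
    card in landscape orientation before OCR.
    """
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    long_side, short_side = max(width, height), min(width, height)
    ratio = long_side / short_side
    # Relative error against the expected card ratio
    matches = abs(ratio - ID1_RATIO) / ID1_RATIO <= tolerance
    return {"matches": matches, "needs_rotation": height > width, "ratio": ratio}


print(check_card_box(856, 540))  # landscape, license-shaped box
print(check_card_box(540, 856))  # same box photographed in portrait
print(check_card_box(600, 600))  # square sign: rejected
```

A ratio test like this is cheap enough to run on every candidate box before paying for an OCR call.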

To determine whether the box in our image is a driver's license or just a postcard, we deploy OCR. Google Cloud Vision, Azure Computer Vision and AWS Textract all provide strong OCR services that we can call with our respective accounts. Open-source OCR libraries such as Tesseract and EasyOCR are also good options.

A simple OCR call using the Azure Computer Vision Read API:

```python
import os
import time

from azure.cognitiveservices.vision.computervision import ComputerVisionClient
from azure.cognitiveservices.vision.computervision.models import OperationStatusCodes
from msrest.authentication import CognitiveServicesCredentials

# Credentials are read from environment variables
subscription_key = os.environ["VISION_KEY"]
endpoint = os.environ["VISION_ENDPOINT"]

computervision_client = ComputerVisionClient(endpoint, CognitiveServicesCredentials(subscription_key))

'''
OCR: Read File using the Read API, extract text - remote
This example will extract text in an image, then print results, line by line.
This API call can also extract handwriting style text (not shown).
'''

print("===== Read File - remote =====")

# Get an image with text
read_image_url = "https://learn.microsoft.com/azure/ai-services/computer-vision/media/quickstarts/presentation.png"

# Call API with URL and raw response (allows you to get the operation location)
read_response = computervision_client.read(read_image_url, raw=True)

# Get the operation location (URL with an ID at the end) from the response
read_operation_location = read_response.headers["Operation-Location"]

# Grab the ID from the URL
operation_id = read_operation_location.split("/")[-1]

# Call the "GET" API and poll until the asynchronous read operation finishes
while True:
    read_result = computervision_client.get_read_result(operation_id)
    if read_result.status not in ['notStarted', 'running']:
        break
    time.sleep(1)

# Print the detected text, line by line
if read_result.status == OperationStatusCodes.succeeded:
    for text_result in read_result.analyze_result.read_results:
        for line in text_result.lines:
            print(line.text)
            print(line.bounding_box)
```

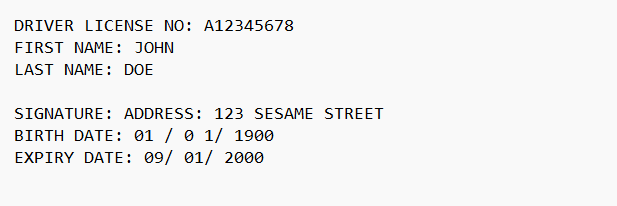

The OCR returns only the raw text data, which might look something like:

That would be very different from the output if a postcard were detected, which would probably look something like:

We can use these differences to tell what we are looking at in the image and to pick out the correct words. Regular expressions (regex) can then find key fields such as the name and address and gather the data.
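A minimal sketch of the keyword and regex step, assuming the OCR lines have already been collected into a list of strings. The sample lines, the field labels (`NAME`, `ADDRESS`, `EXP`), the license-number pattern and the keyword threshold are all hypothetical; real licenses differ by province or state, and the project's actual patterns are not shown here.

```python
import re

# Hypothetical OCR output from a license photo (one string per detected line)
ocr_lines = [
    "DRIVER'S LICENCE",
    "NAME: DOE, JANE",
    "ADDRESS: 123 MAIN ST TORONTO ON",
    "NO. D1234-56789-01234",
    "EXP 2027/05/31",
]

# Hypothetical keywords that appear on a license but rarely on a postcard
LICENSE_KEYWORDS = ("LICENCE", "LICENSE", "EXP", "DOB", "CLASS")

# Hypothetical patterns; each captures the value that follows its label
PATTERNS = {
    "name": re.compile(r"NAME:?\s*(.+)"),
    "address": re.compile(r"ADDRESS:?\s*(.+)"),
    "license_number": re.compile(r"\b([A-Z]\d{4}-\d{5}-\d{5})\b"),
    "expiry": re.compile(r"EXP:?\s*(\d{4}/\d{2}/\d{2})"),
}


def looks_like_license(lines, min_hits=2):
    """Count license keywords to separate a license from, say, a postcard."""
    text = " ".join(lines).upper()
    return sum(keyword in text for keyword in LICENSE_KEYWORDS) >= min_hits


def extract_fields(lines):
    """Return the first match for each field, or None if it never appears."""
    fields = dict.fromkeys(PATTERNS)
    for line in lines:
        for field, pattern in PATTERNS.items():
            match = pattern.search(line.upper())
            if match and fields[field] is None:
                fields[field] = match.group(1).strip()
    return fields


print(looks_like_license(ocr_lines))
print(extract_fields(ocr_lines))
```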

From there, we can look for drivers who share the same driver's license, invalid expiry dates, or invalid driver's license numbers. This project can reduce a team's manual typing and review to just minutes of computer vision review.
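A sketch of these checks, assuming the extracted fields have been gathered into one record per driver. The record layout, the `YYYY/MM/DD` date format and the license-number pattern are hypothetical placeholders, not the project's actual rules.

```python
import re
from collections import defaultdict
from datetime import date, datetime

# Hypothetical license-number format; real formats vary by jurisdiction
LICENSE_PATTERN = re.compile(r"^[A-Z]\d{4}-\d{5}-\d{5}$")


def flag_registrations(records, today):
    """Return {driver_id: [reasons]} for registrations that need human review."""
    flags = defaultdict(list)
    drivers_by_license = defaultdict(list)

    for rec in records:
        number = rec["license_number"]
        drivers_by_license[number].append(rec["driver_id"])

        if not LICENSE_PATTERN.match(number):
            flags[rec["driver_id"]].append("invalid license number")

        try:
            expiry = datetime.strptime(rec["expiry"], "%Y/%m/%d").date()
        except ValueError:
            flags[rec["driver_id"]].append("unreadable expiry date")
        else:
            if expiry < today:
                flags[rec["driver_id"]].append("expired license")

    # The same license used by more than one account is a strong warning sign
    for driver_ids in drivers_by_license.values():
        if len(driver_ids) > 1:
            for driver_id in driver_ids:
                flags[driver_id].append("license shared with another account")

    return dict(flags)


records = [
    {"driver_id": "d1", "license_number": "D1234-56789-01234", "expiry": "2027/05/31"},
    {"driver_id": "d2", "license_number": "D1234-56789-01234", "expiry": "2027/05/31"},
    {"driver_id": "d3", "license_number": "12345", "expiry": "2020/01/01"},
]
print(flag_registrations(records, today=date(2024, 1, 1)))
```

Only the flagged drivers then need a person to look at them, which is where the time saving comes from.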

As a machine learning engineer, aside from learning which algorithms to use and when, computer vision is another aspect of machine learning entirely. Computer vision is almost a completely new subset of machine learning. It is like going from linear algebra to trigonometry: both are math subjects, but each has its own set of equations and formulas to learn. Although they are related, being good at linear algebra doesn't mean you are good at trigonometry.

Libraries:

Some of the tools required to make this project work:

Python - Python is a programming language that lets you work quickly and integrate systems more effectively.

OpenCV - OpenCV is a library of programming functions mainly for real-time computer vision. Originally developed by Intel, it was later supported by Willow Garage, then Itseez…

Pandas - Pandas is a fast, powerful, flexible and easy to use open source data analysis and manipulation tool, built on top of the Python programming language.

AWS SageMaker - Amazon SageMaker is a cloud-based machine-learning platform that lets developers create, train, and deploy machine-learning models in the cloud. It can also be used to deploy ML models on embedded systems and edge devices.

GCP Vision - The Vision API can detect and extract text from images. There are two annotation features that support optical character recognition (OCR): TEXT_DETECTION and DOCUMENT_TEXT_DETECTION.

Azure Vision - Accelerate computer vision development with Microsoft Azure. Unlock insights from image and video content using OCR, object detection, and image analysis.

Fraud Detection Analysis

How do you know if users are abusing your system?

– Most technical information involving this project has been omitted to protect confidentiality –

Fraud detection and prevention is a necessary step for all applications, businesses and services. From food delivery and insurance to sports and crypto apps, all need layers of security to keep users safe and to catch fraudulent activity when it happens.

In transportation and logistics apps, fraud detection is needed to ensure that drivers and users are not creating multiple accounts or placing fraudulent orders, and that drivers are not trying to scam users on the platform. Traditionally, this work was done manually with CSVs and pivot tables: complex comparisons to determine whether users shared characteristics with other users, or manually stepping through driver behaviour to identify fraud.

Using deep learning, we can now determine whether users or drivers are trying to commit fraud without manually scanning through hundreds of thousands of accounts, looking deeper into user and driver behaviour features to find patterns that humans would have trouble discovering on their own. Feature selection and dimensionality reduction techniques such as PCA narrow down the most important features for detecting fraud, and we also look for features that users might have in common.
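A minimal NumPy sketch of PCA via singular value decomposition, assuming rows are users or drivers and columns are numeric behaviour features. The random matrix (two hidden factors mixed into six features) is a placeholder for real data, and the function name `pca` is illustrative.

```python
import numpy as np


def pca(features, n_components):
    """Project standardized rows of `features` onto the top principal components.

    Returns (projected data, explained-variance ratio per kept component,
    loadings with one row per component and one column per original feature).
    """
    X = np.asarray(features, dtype=float)
    X = (X - X.mean(axis=0)) / X.std(axis=0)  # put features on a common scale
    # Rows of Vt are the principal directions, ordered by singular value
    _, singular_values, Vt = np.linalg.svd(X, full_matrices=False)
    explained = singular_values**2 / np.sum(singular_values**2)
    loadings = Vt[:n_components]
    return X @ loadings.T, explained[:n_components], loadings


# Placeholder matrix: 500 accounts x 6 behaviour features driven by 2 hidden factors
rng = np.random.default_rng(0)
factors = rng.normal(size=(500, 2))
features = factors @ rng.normal(size=(2, 6)) + 0.1 * rng.normal(size=(500, 6))

projected, explained, loadings = pca(features, n_components=2)
print(projected.shape, explained.round(3))
# Features with large absolute loadings contribute most to each component
print(np.abs(loadings).round(2))
```

Strictly speaking PCA builds new combined features rather than selecting original ones; the loadings are what point back to the original features worth keeping.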

Simple fraud detection starts with looking at banking information: whether payments get transferred, and when users or drivers make careless mistakes while misusing the application.

Once we have established a baseline for fraudsters, we can use more complex techniques such as feature engineering to find more drivers and users who fit the fraudster profile.

A simple supervised model using a random forest classifier:

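```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import classification_report
from sklearn.model_selection import train_test_split

# Placeholder data so the snippet runs on its own; replace X and y with the
# real engineered features and fraud labels (1 = fraud, 0 = legitimate)
X, y = make_classification(n_samples=1000, n_features=20, weights=[0.95], random_state=42)

# Splitting the data into training and testing sets, keeping the (rare)
# fraud ratio the same in both
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

# Initializing the Random Forest classifier
clf = RandomForestClassifier(random_state=42)

# Training the classifier
clf.fit(X_train, y_train)

# Predicting on the test set
y_pred = clf.predict(X_test)

# Per-class precision and recall are more informative than accuracy on imbalanced data
print(classification_report(y_test, y_pred))
```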

We also look for users and drivers who attempt to place orders for free, using risk scoring based on order frequency, location, and past behaviour.
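A minimal risk-scoring sketch, assuming three per-account signals have already been computed: orders in the last 24 hours (frequency), distance from the account's usual location (location) and past refund claims (past behaviour). The signal names, caps and weights are hypothetical and would normally be tuned against labelled fraud cases.

```python
from dataclasses import dataclass


@dataclass
class AccountActivity:
    orders_last_24h: int  # frequency signal
    km_from_usual_location: float  # location signal
    past_refund_claims: int  # past-behaviour signal


# Hypothetical weights (summing to 1) and caps that normalize each signal to [0, 1]
WEIGHTS = {"frequency": 0.4, "location": 0.3, "history": 0.3}
CAPS = {"frequency": 20, "location": 500.0, "history": 5}


def risk_score(activity: AccountActivity) -> float:
    """Weighted sum of capped, normalized signals: 0 = low risk, 1 = high risk."""
    signals = {
        "frequency": min(activity.orders_last_24h / CAPS["frequency"], 1.0),
        "location": min(activity.km_from_usual_location / CAPS["location"], 1.0),
        "history": min(activity.past_refund_claims / CAPS["history"], 1.0),
    }
    return sum(WEIGHTS[name] * value for name, value in signals.items())


normal_user = AccountActivity(orders_last_24h=2, km_from_usual_location=3.0, past_refund_claims=0)
suspicious = AccountActivity(orders_last_24h=25, km_from_usual_location=800.0, past_refund_claims=4)
print(round(risk_score(normal_user), 3), round(risk_score(suspicious), 3))
```

Accounts above a chosen cut-off can be routed to manual review or to the supervised model above.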

We look for relationships between accounts, users and devices to find unusual connections and clusters, and use identity verification to check that individuals are who they claim to be.
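One way to surface such clusters, sketched with a union-find over shared identifiers: any accounts that share a device ID (the same idea works for phone numbers or payment cards) end up in the same group, even through chains of accounts. The account and device IDs are made up, and the minimum cluster size is a hypothetical threshold.

```python
from collections import defaultdict


def find(parent, x):
    """Return the root of x, halving the path as we go."""
    while parent[x] != x:
        parent[x] = parent[parent[x]]
        x = parent[x]
    return x


def union(parent, a, b):
    """Merge the groups containing a and b."""
    root_a, root_b = find(parent, a), find(parent, b)
    if root_a != root_b:
        parent[root_b] = root_a


def shared_device_clusters(logins, min_size=2):
    """Group accounts connected through any shared device.

    `logins` is an iterable of (account_id, device_id) pairs.
    """
    parent = {}
    first_account_on_device = {}
    for account, device in logins:
        parent.setdefault(account, account)
        if device in first_account_on_device:
            union(parent, first_account_on_device[device], account)
        else:
            first_account_on_device[device] = account

    clusters = defaultdict(set)
    for account in parent:
        clusters[find(parent, account)].add(account)
    return [group for group in clusters.values() if len(group) >= min_size]


logins = [
    ("user_a", "device_1"),
    ("user_b", "device_1"),  # shares a phone with user_a
    ("user_b", "device_2"),
    ("user_c", "device_2"),  # linked to user_a through user_b
    ("user_d", "device_3"),  # unrelated account
]
print(shared_device_clusters(logins))
```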

The data was then run through exploratory data analysis: simple correlation operations to determine the features with the greatest correlation to fraud.

Fraud detection

Libraries:

Some of the tools required to make this project work:

Python - Python is a programming language that lets you work quickly and integrate systems more effectively.

OpenCV - OpenCV is a library of programming functions mainly for real-time computer vision. Originally developed by Intel, it was later supported by Willow Garage, then Itseez…

Pandas - Pandas is a fast, powerful, flexible and easy to use open source data analysis and manipulation tool, built on top of the Python programming language.

AWS SageMaker - Amazon SageMaker is a cloud-based machine-learning platform that lets developers create, train, and deploy machine-learning models in the cloud. It can also be used to deploy ML models on embedded systems and edge devices.

Renewable Energy Prediction

Prediction of Solar and Wind Renewable Energy using Deep Learning

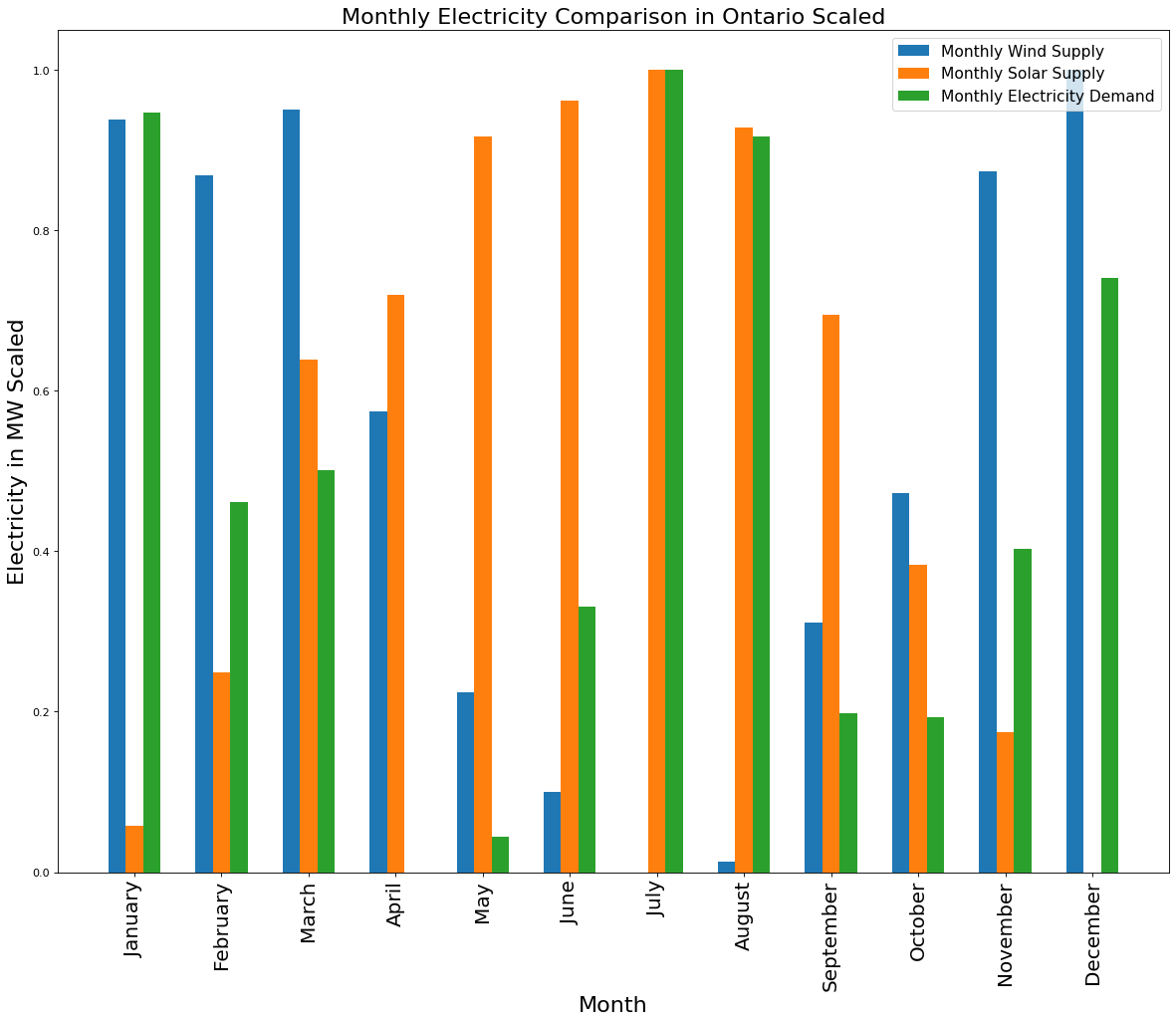

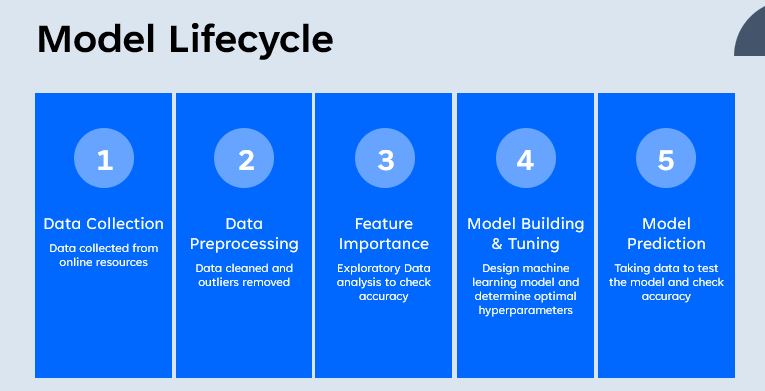

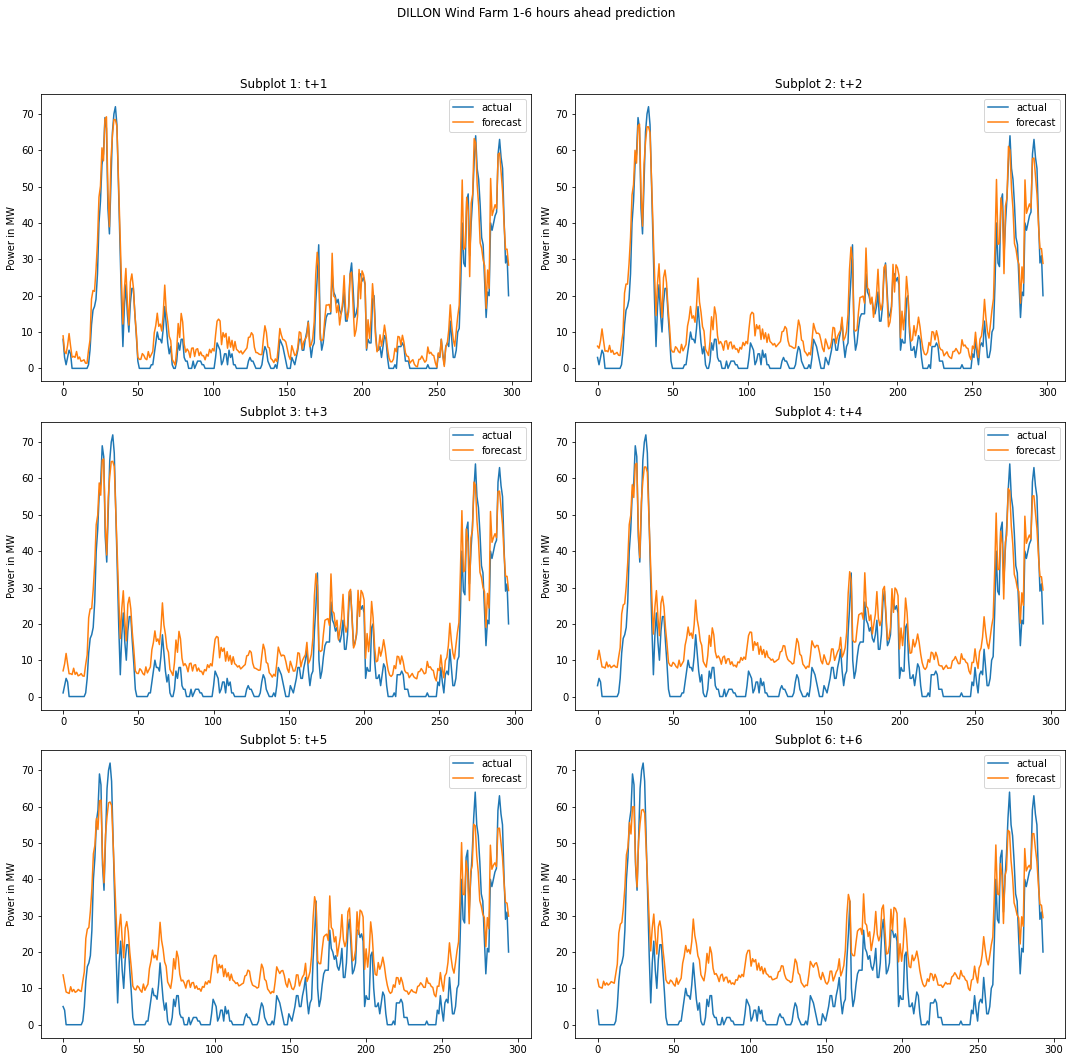

A Long Short-Term Memory (LSTM) model was developed to produce short-term power output predictions from 4 to 6 hours ahead. This research demonstrated a unique approach of using nearby, publicly available meteorological data to predict renewable energy power output, which could potentially be used for wind and solar farm scheduling to prevent curtailment of clean energy.

This paper was written to complete my Master's major research project at Toronto Metropolitan University; as a result, it cannot be shared publicly without explicit permission from the University. The project was written in Python with Pandas, NumPy, TensorFlow and other supporting libraries.

Questions to be Answered:

- Can all of Ontario’s power demands be met with renewable energy?

- What are the key features for determining renewable energy?

- What would be the best algorithm choice to predict renewable energy?

The datasets used were collected from the [Independent Electricity System Operator] and the [NASA Solar Satellite dataset].

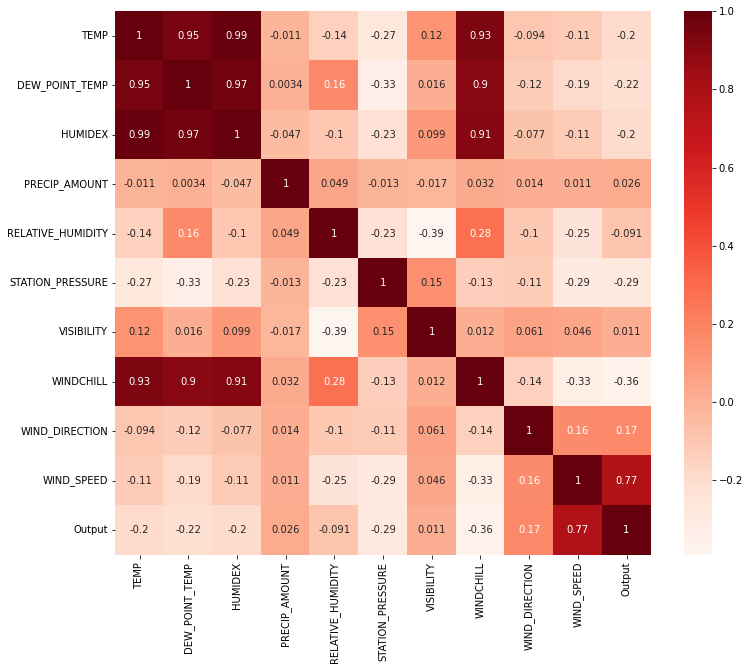

The dataset consisted of approximately 50 meteorological features in total over a 4-year period. Notable features such as wind speed, solar radiation, air temperature, dew point temperature and humidity all played a role in determining total solar and wind power generation capacity.

The data was then run through exploratory data analysis: simple correlation operations to determine the feature with the greatest correlation to wind power. The data was also cleaned and outlier values were either removed or replaced with average values.
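A small sketch of that correlation step: rank features by the absolute Pearson correlation with the target (wind power here). The feature names and hourly values are invented for illustration. On an all-numeric pandas DataFrame the same ranking is `df.corr()["wind_power"].abs().sort_values(ascending=False)`.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def rank_features(features, target):
    """Sort (name, r) pairs by |r| with the target, strongest first."""
    scores = {name: pearson(values, target) for name, values in features.items()}
    return sorted(scores.items(), key=lambda item: abs(item[1]), reverse=True)


# Invented hourly values purely for illustration
wind_power = [10, 25, 40, 60, 80, 95]
features = {
    "wind_speed": [2.0, 3.5, 5.0, 6.8, 8.9, 10.1],
    "air_temperature": [15, 14, 16, 15, 13, 14],
    "humidity": [80, 75, 70, 62, 55, 50],
}
for name, r in rank_features(features, wind_power):
    print(f"{name:>16}: {r:+.3f}")
```

Ranking by absolute value keeps strongly negative relationships (such as humidity in this toy data) near the top.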

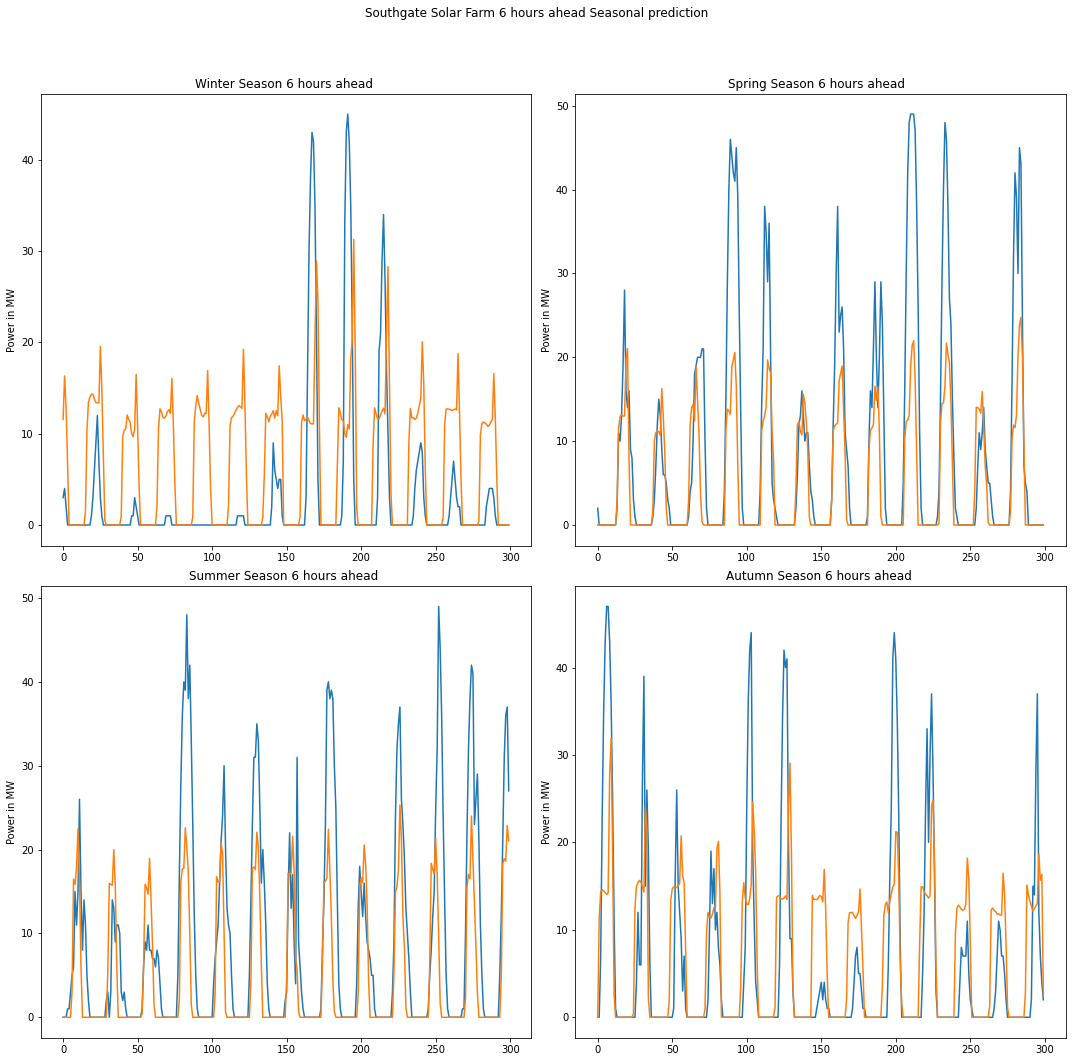

The Long Short-Term Memory (LSTM) deep learning model is trained on 3 years' worth of data, with the 4th year held out as the validation/testing year. This results in a 75% training, 25% testing split. The benefit of using LSTM networks over a traditional statistical model such as ARIMA (AutoRegressive Integrated Moving Average) is that no separate preprocessing step is needed to remove trends and seasonality.
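Because this is a time series, the 75/25 split is chronological rather than random, so the model is never trained on hours that come after the ones it is tested on. A sketch, assuming hourly records sorted by timestamp; the year-based rule mirrors the 3-years-train / 1-year-test setup, and the timestamps and values are placeholders.

```python
from datetime import datetime, timedelta


def split_by_year(records, test_year):
    """Chronological split: rows before `test_year` train, rows in that year test.

    `records` is a list of (timestamp, value) pairs sorted by time.
    """
    train = [r for r in records if r[0].year < test_year]
    test = [r for r in records if r[0].year == test_year]
    return train, test


# Placeholder: hourly readings from 2018-01-01 00:00 to 2021-12-31 23:00 (1,461 days)
start = datetime(2018, 1, 1)
records = [(start + timedelta(hours=h), float(h)) for h in range(24 * 1461)]

train, test = split_by_year(records, test_year=2021)
print(len(train), len(test), round(len(train) / len(records), 3))
# No look-ahead: every training timestamp precedes every test timestamp
print(train[-1][0] < test[0][0])
```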

The LSTM requires a number of timesteps, also known as the input window size, which determines how many past values of the series are used for the current prediction. For example, if I want to predict the output at 2 pm and use the weather at 1 pm, 12 pm and 11 am as my past values, my window size is 3 hours.
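The windowing can be sketched as a function that turns one hourly series into (input window, target) pairs. `window=3` matches the 11 am / 12 pm / 1 pm example; the `horizon` parameter (how many hours after the window the target lies) is an assumption added to connect with the 4-hour-ahead forecasts, and the readings are placeholders.

```python
def make_windows(series, window=3, horizon=1):
    """Turn a series into (input window, target) training pairs.

    Each input holds `window` consecutive values; its target is the value
    `horizon` steps after the last value in that window.
    """
    inputs, targets = [], []
    for end in range(window, len(series) - horizon + 1):
        inputs.append(series[end - window:end])
        targets.append(series[end + horizon - 1])
    return inputs, targets


# Placeholder hourly readings starting at 11 am
hourly = [0.0, 12.5, 30.1, 41.7, 38.2, 25.4, 9.8]

X, y = make_windows(hourly, window=3, horizon=1)
print(X[0], "->", y[0])  # 11 am, 12 pm, 1 pm -> 2 pm

X4, y4 = make_windows(hourly, window=3, horizon=4)
print(X4, "->", y4)  # 11 am, 12 pm, 1 pm -> 5 pm
```

Before being fed to a Keras LSTM layer, the windows are stacked into an array of shape (samples, window, features).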

Here we can see the output results for the solar farm, separated by season. The algorithm predicts the next 4 hours with relative certainty. The wind farm output is also predicted, and both results are shown below.

Additional work would be to include more weather variables and to try adding a CNN model ahead of the LSTM.

Libraries:

Some of the tools required to make this project work:

Python - Python is a programming language that lets you work quickly and integrate systems more effectively.

Scikit-Learn - An open-source machine learning library in Python used for data preprocessing, classification, regression, etc.

Pandas - Pandas is a fast, powerful, flexible and easy to use open source data analysis and manipulation tool, built on top of the Python programming language.

Jupyter Notebooks - web-based interactive development environment for notebooks, code, and data.

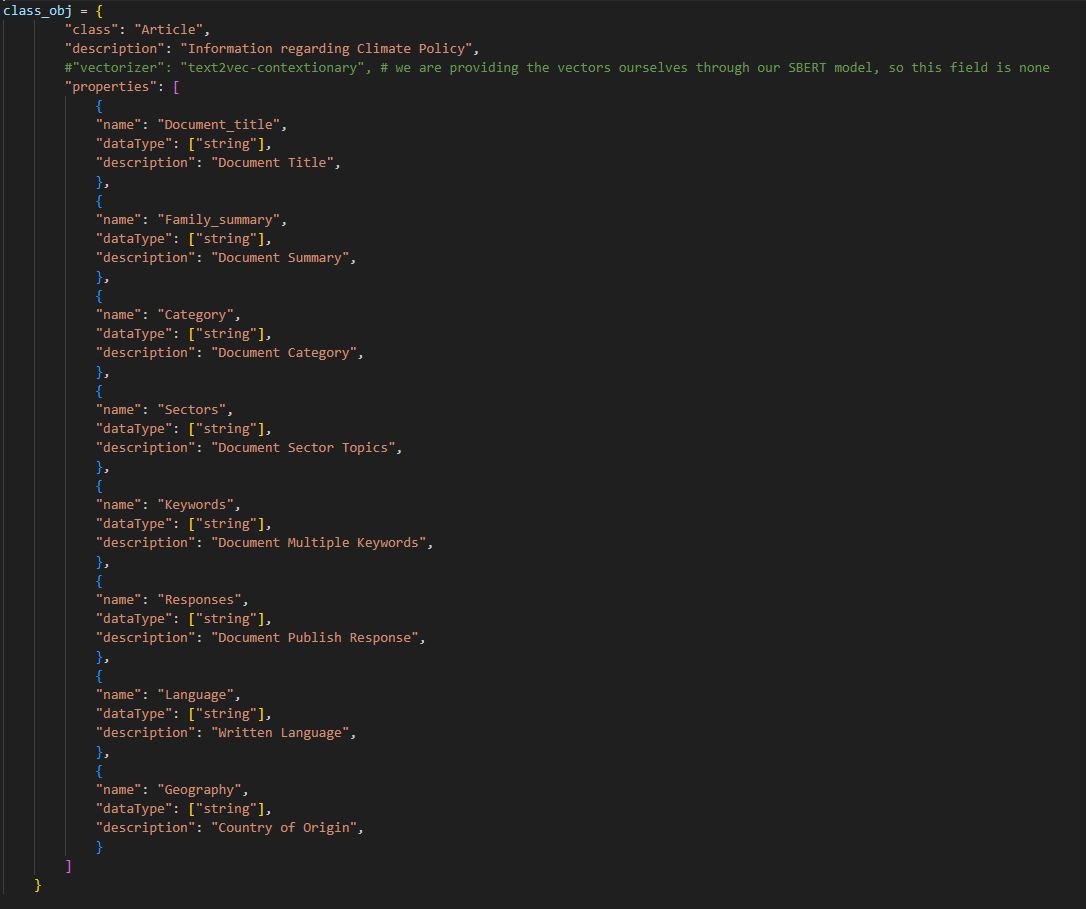

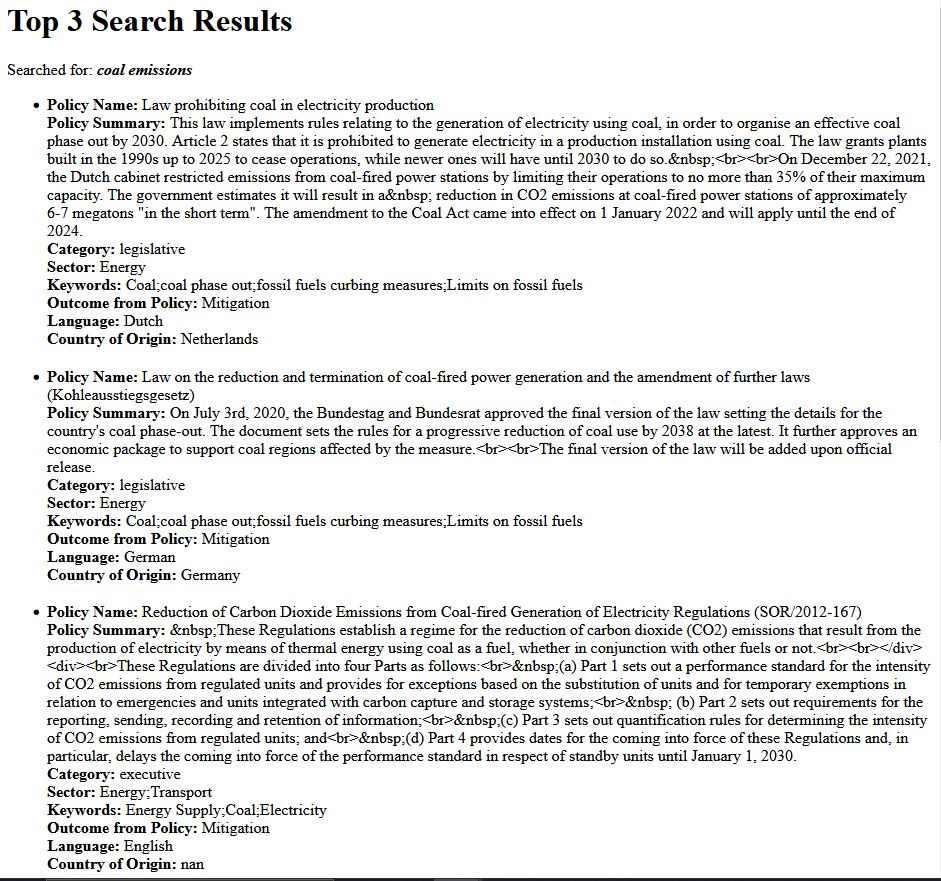

Climate Policy Vector Database

Vector Embeddings for climate policy legislation

Vector embeddings convert text or other forms of unstructured data, such as audio and images, into high-dimensional vectors. This high-dimensional representation is known as an embedding, and searching over embeddings can improve the speed and relevance of search results compared to traditional keyword search, because items are matched by meaning rather than by exact words. In a vector database, items with similar meanings sit close together, so a query is answered by finding its nearest neighbours, much like K nearest neighbours, and indexes built for this purpose make such searches much faster than running the same comparison in a relational database.

With the ongoing development of technologies such as ChatGPT and other Large Language Models, there is an increasing need for the database side to match the speed of the models. The number of attributes and features that a model has to draw insights from is enormous, and querying them takes time and computing power. To solve this, a specialized vector database stores the embeddings and effectively groups the data by semantic meaning, using nearest-neighbour vector matching to draw insights more quickly and accurately than keyword-based search.
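A toy illustration of the nearest-neighbour idea: documents and the query are vectors, and the documents with the highest cosine similarity to the query are returned. The 3-dimensional vectors and titles are invented stand-ins; real embeddings come from an embedding model and have hundreds of dimensions, and Weaviate uses an approximate HNSW index rather than this brute-force scan.

```python
from math import sqrt


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (sqrt(sum(x * x for x in a)) * sqrt(sum(y * y for y in b)))


def nearest(query_vector, documents, k=2):
    """Brute-force k-nearest-neighbour search by cosine similarity."""
    scored = [(cosine_similarity(query_vector, vec), title) for title, vec in documents.items()]
    scored.sort(reverse=True)
    return [title for _, title in scored[:k]]


# Invented 3-d "embeddings" for illustration only
documents = {
    "Carbon pricing act": [0.9, 0.1, 0.0],
    "Emissions trading scheme": [0.8, 0.2, 0.1],
    "Coastal flood adaptation plan": [0.1, 0.9, 0.3],
}
query = [0.85, 0.15, 0.05]  # stand-in for an embedded query about taxing CO2
print(nearest(query, documents, k=2))
```

Note that neither matching title shares a word with a query like "tax on CO2"; the match comes entirely from vector closeness.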

This project used the Climate Policy Radar database, which consists of over 4,000 policies and papers written about climate.

As a researcher, for example, when you have a thesis, journal paper or novel idea, you almost always need to find background articles: papers that other people wrote that are similar but not exactly the same. These can be difficult to find because your idea is novel; assuming you are the first to write about it, related work may not show up in a typical search engine. This is where vector-embedded search comes in: if you search using your paper's details, you can find the 10+ journal papers closest to your paper and reference them much faster.

Objectives

- Build a database based on the Climate Policy Radar dataset

- Create vector embeddings for the Climate Policy Radar features

- Write a Python Flask search application to query and display results back to users

Method

It was written in Python, using Docker with Weaviate and other supporting libraries.

A Docker container was created for all of its dependencies. The dataset used was collected from the [Climate Policy Radar].

The dataset is made up of approximately 4,000 journals, laws and papers spanning a 4-year period. Notable features include name, summary, category, sector, language, country of origin and outcome of the policy.

```python
class_obj = {
    "class": "Article",
    "description": "Information regarding Climate Policy",
    "properties": [
        {"name": "Document_title", "dataType": ["string"], "description": "Document Title"},
        {"name": "Family_summary", "dataType": ["string"], "description": "Document Summary"},
        {"name": "Category", "dataType": ["string"], "description": "Document Category"},
        {"name": "Sectors", "dataType": ["string"], "description": "Document Sector Topics"},
        {"name": "Keywords", "dataType": ["string"], "description": "Document Multiple Keywords"},
        {"name": "Responses", "dataType": ["string"], "description": "Document Publish Response"},
        {"name": "Language", "dataType": ["string"], "description": "Written Language"},
        {"name": "Geography", "dataType": ["string"], "description": "Country of Origin"},
    ],
}
```

The data was then run through exploratory data analysis, with simple data cleaning and text organization, and then uploaded to the schema.

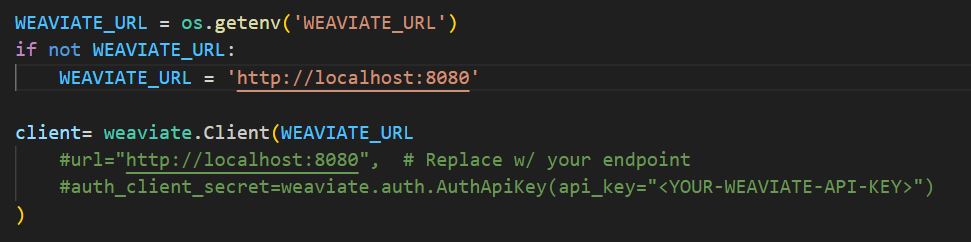

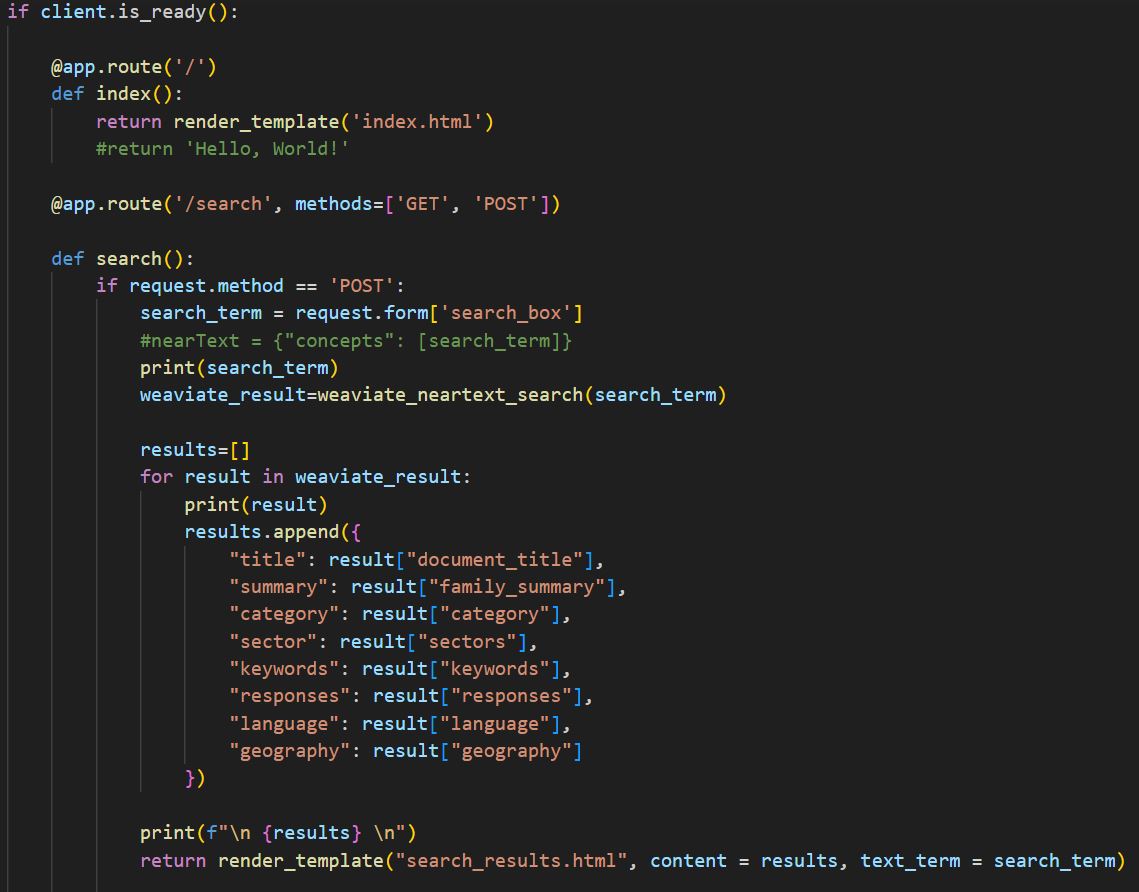

Flask application

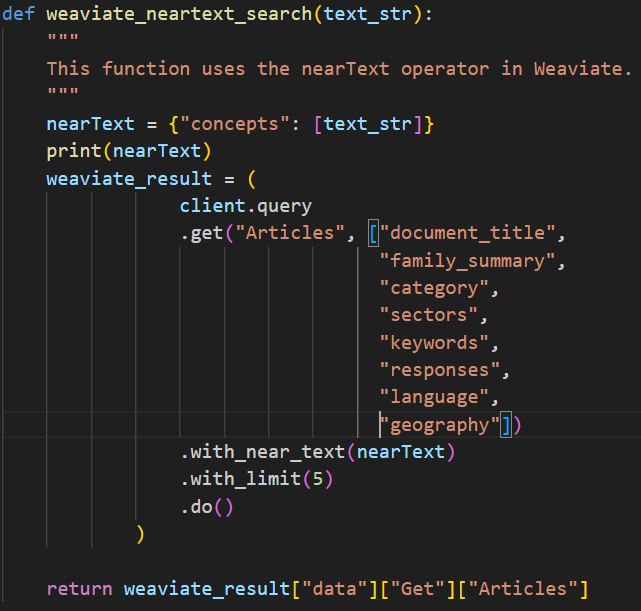

The Flask application connects to Weaviate through the Weaviate client.

Here, Weaviate's nearText operator is used to search for the text with the closest semantic meaning to the user's query. The response from the search query includes the closest objects in the climate policy (Article) class, and from it we take the name, summary and details of each matching journal article.
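A sketch of the kind of nearText query involved, written against Weaviate's GraphQL endpoint (`POST /v1/graphql`) using only the standard library rather than the Weaviate Python client the app uses. It assumes a local instance at `http://localhost:8080` with a text vectorizer module enabled (nearText needs one), and that the schema's property names are exposed in GraphQL with a lowercase first letter (`document_title`, `family_summary`, ...), which is how Weaviate normally presents them; check your instance's schema if they differ. `WEAVIATE_URL`, `build_near_text_query` and `search` are hypothetical names.

```python
import json
import urllib.request

WEAVIATE_URL = "http://localhost:8080/v1/graphql"  # assumed local Docker instance


def build_near_text_query(query_text, limit=10):
    """Build a GraphQL Get query that ranks Article objects by semantic closeness."""
    concepts = json.dumps([query_text])  # JSON-escapes quotes in the user's text
    return (
        "{ Get { Article("
        f"nearText: {{concepts: {concepts}}}, limit: {limit}"
        ") { document_title family_summary category geography } } }"
    )


def search(query_text, limit=10):
    """POST the query to Weaviate and return the list of matching articles."""
    payload = json.dumps({"query": build_near_text_query(query_text, limit)}).encode()
    request = urllib.request.Request(
        WEAVIATE_URL, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["data"]["Get"]["Article"]


print(build_near_text_query("carbon tax on heavy industry", limit=5))
```

In the Flask app, the list returned by a function like `search` is what gets passed to the results template.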

Lastly, the Flask app's front end and back end are created. First, the back end is created to handle the search form's POST requests, with a search function that returns the results from the Weaviate client and renders the page.

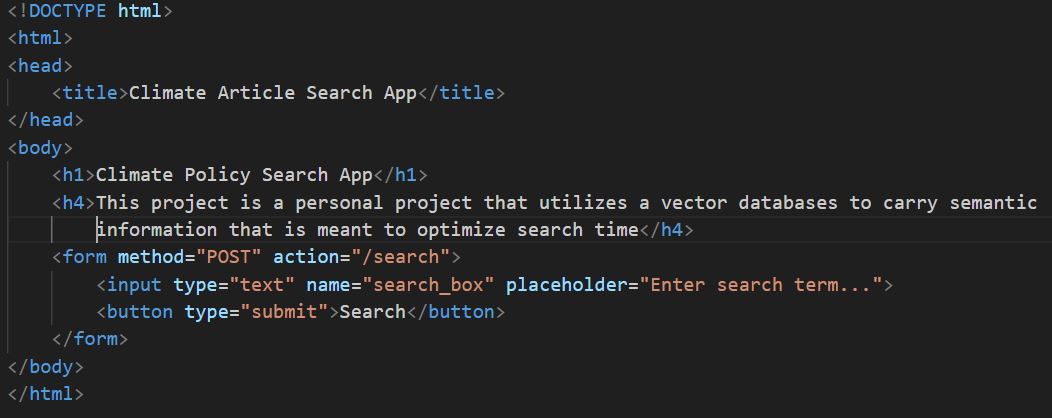

The index.html file

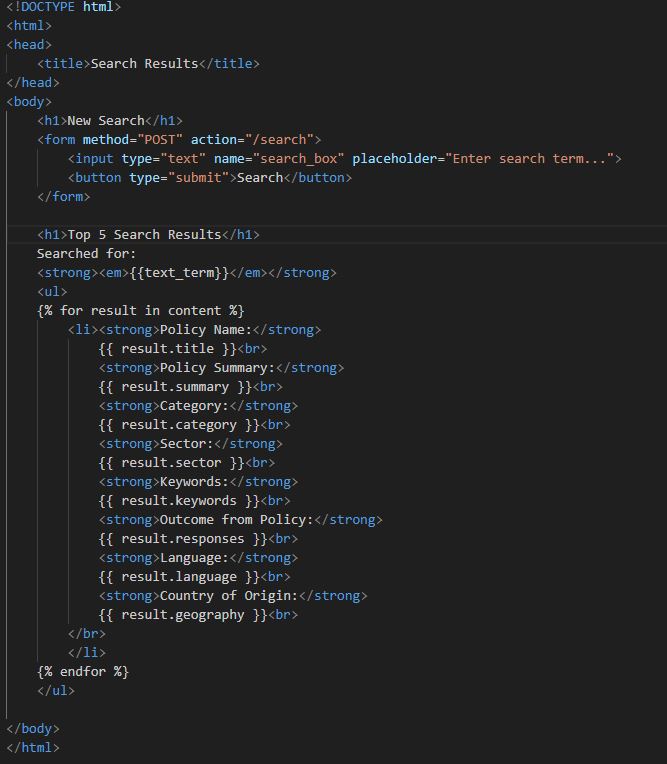

The search results.html file

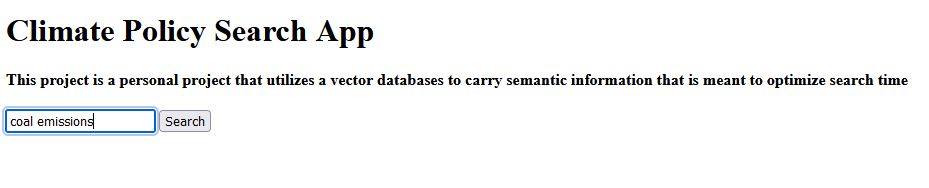

The final website functionality

With relative ease, I created a fully functional vector-embedding search engine that can be used with any database files.

This application is not limited to Climate Policy Radar data; it could, for example, find shoes similar to ones you own, or find animals that look alike. Combined with large language models such as ChatGPT, the possibilities for these technologies are extensive.

Libraries:

Some of the tools required to make this project work:

Python - Python is a programming language that lets you work quickly and integrate systems more effectively.

Weaviate - Weaviate is an open-source vector database. It allows you to store data objects and vector embeddings from your favorite ML-models, and scale seamlessly into billions of data objects.

Docker - Docker is a platform designed to help developers build, share, and run modern applications.

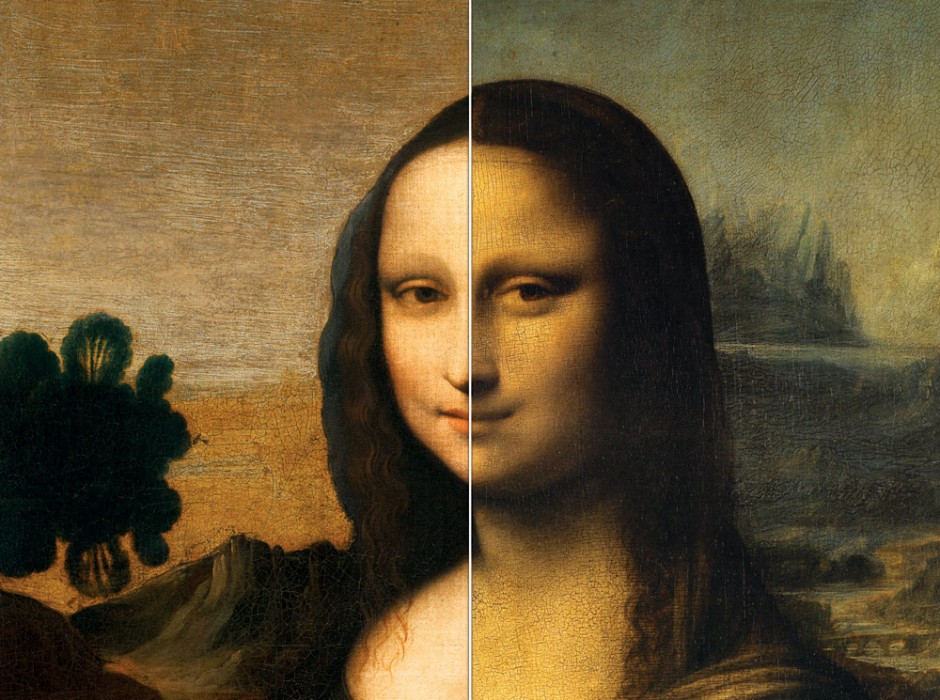

Robotic Painting Quality Control

How do you tell if two paintings are the same?

Museum curators everywhere spend millions of dollars distinguishing fake paintings from real ones. Teaching curators to spot fakes and identify genuine stroke marks has always been a major challenge, even with carbon dating. Computer vision can provide a method to compare paint stroke types, or to perform preliminary sweeps before a human needs to go in and look at the paintings, similar to how applicant tracking systems filter resumes and cover letters before they are ever seen by a real person.

Some questions that needed to be answered were:

- Are there methods to infer what paint brush was used for a specific paint stroke?

- What is the best way to tell between two brush strokes with the exact same colour, and the exact same brush?

The work started with a pipeline to set up the database and segment the data, developing a dataset of paintings and individual paint strokes for comparison.

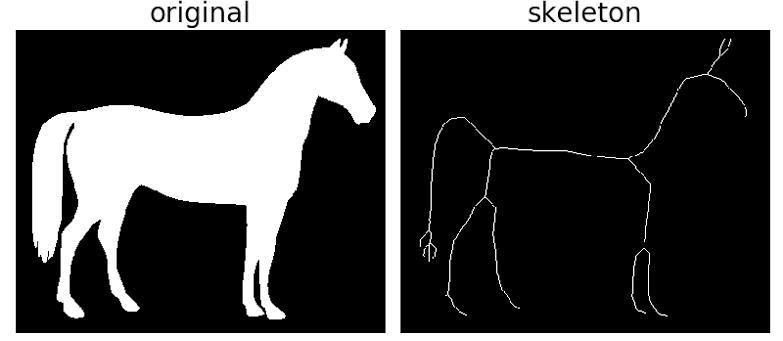

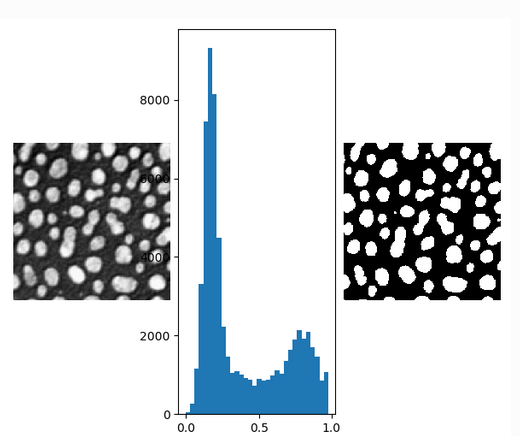

Multiple comparison scores were developed using OpenCV (cv2). Colour matching, skeletonization, and texture detection are all run through the library to compare two paint strokes, producing visual reports and comparison data that give an overall score for paint stroke types.

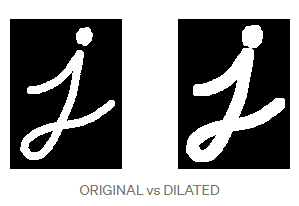

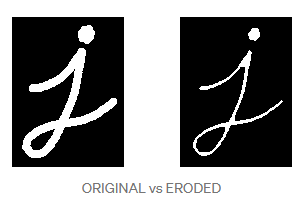

Skeletonization of stroke types using basic morphological operations

Thresholding first converts an image to grayscale and then into a binary image. Given a chosen threshold, pixels with values greater than the threshold become white (255), and all other pixels become black (0).
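A NumPy sketch of the same rule, equivalent in spirit to OpenCV's `cv2.threshold(gray, thresh, 255, cv2.THRESH_BINARY)`. The grayscale weights are the ITU-R BT.601 luma coefficients that OpenCV also uses for RGB-to-gray conversion; the tiny image and the threshold of 127 are placeholders.

```python
import numpy as np


def to_grayscale(rgb):
    """Convert an H x W x 3 RGB array to grayscale with BT.601 luma weights."""
    weights = np.array([0.299, 0.587, 0.114])
    return (rgb[..., :3] @ weights).round().astype(np.uint8)


def binary_threshold(gray, thresh=127, max_value=255):
    """Pixels strictly greater than `thresh` become `max_value`; all others become 0."""
    return np.where(gray > thresh, max_value, 0).astype(np.uint8)


# Placeholder 2 x 3 image: dark paint on a light canvas
image = np.array(
    [[[250, 250, 245], [30, 40, 35], [240, 235, 230]],
     [[20, 25, 30], [35, 30, 25], [245, 250, 240]]],
    dtype=np.uint8,
)
binary = binary_threshold(to_grayscale(image))
print(binary)
```

With dark strokes on a light canvas the strokes come out black; `cv2.THRESH_BINARY_INV` (or `255 - binary`) makes them the white foreground that the morphological operations act on.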

With thresholding alone, parts of the image may still contain pixels we are not looking for. In the example above, notice that there are pixels between the cell images that we are not interested in. To remove these unwanted pixels, we run basic morphological operations on the thresholded images.

Dilation and Erosion:

Dilating adds pixels to the boundaries of objects while erosion removes pixels on the object boundaries.
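A minimal NumPy version of binary dilation and erosion with a 3 x 3 square structuring element, to show concretely what adding and removing boundary pixels means. In practice `cv2.dilate` and `cv2.erode` do this far faster; the helper names here are hypothetical.

```python
import numpy as np


def _neighbourhoods(mask):
    """Stack each pixel's 3 x 3 neighbourhood (zero padding at the border)."""
    padded = np.pad(mask, 1, mode="constant", constant_values=False)
    h, w = mask.shape
    return np.stack([padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)])


def dilate(mask):
    """A pixel turns on if any pixel in its 3 x 3 neighbourhood is on."""
    return _neighbourhoods(mask).any(axis=0)


def erode(mask):
    """A pixel stays on only if every pixel in its 3 x 3 neighbourhood is on."""
    return _neighbourhoods(mask).all(axis=0)


mask = np.zeros((5, 5), dtype=bool)
mask[1:4, 1:4] = True  # a 3 x 3 blob of paint
mask[0, 4] = True  # an isolated noise pixel

opened = dilate(erode(mask))  # erosion then dilation ("opening") removes the speck
print(erode(mask).astype(int))
print(opened.astype(int))
```

Erosion followed by dilation (an opening) removes specks smaller than the structuring element while restoring the larger shapes.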

Simple morphological operations after thresholding produce skeletonized images of the paint strokes. The skeletonized images are then compared by pixel values to compare the paint strokes.
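A sketch of one way to do this, assuming strokes are already thresholded into boolean masks: the textbook morphological skeleton (repeatedly erode, keeping whatever an opening would remove at each step), followed by a Dice overlap score on the skeleton pixels. This is a swapped-in standard technique for illustration, not the private repository's code; `skimage.morphology.skeletonize` is a faster, thinner alternative.

```python
import numpy as np


def _neighbourhoods(mask):
    """Stack each pixel's 3 x 3 neighbourhood (zero padding at the border)."""
    padded = np.pad(mask, 1, mode="constant", constant_values=False)
    h, w = mask.shape
    return np.stack([padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)])


def erode(mask):
    return _neighbourhoods(mask).all(axis=0)


def dilate(mask):
    return _neighbourhoods(mask).any(axis=0)


def morphological_skeleton(mask):
    """Union over k of (A eroded k times) minus its opening."""
    skeleton = np.zeros_like(mask, dtype=bool)
    eroded = mask.astype(bool)
    while eroded.any():
        opened = dilate(erode(eroded))
        skeleton |= eroded & ~opened
        eroded = erode(eroded)
    return skeleton


def dice_similarity(a, b):
    """Dice overlap of two boolean masks: 1.0 = identical, 0.0 = no shared pixels."""
    total = a.sum() + b.sum()
    return 1.0 if total == 0 else 2.0 * np.logical_and(a, b).sum() / total


# Placeholder strokes: a 3-pixel-thick horizontal bar, and the same bar one row lower
stroke_a = np.zeros((7, 12), dtype=bool)
stroke_a[2:5, 1:11] = True
stroke_b = np.roll(stroke_a, 1, axis=0)

skel_a, skel_b = morphological_skeleton(stroke_a), morphological_skeleton(stroke_b)
print(skel_a.astype(int))
print(dice_similarity(skel_a, skel_a), dice_similarity(skel_a, skel_b))
```

The second score is 0 even though the two strokes have the same shape, a reminder that pixel-wise comparison assumes the strokes have been aligned first.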

This was one of many techniques used to compare images. Multiple additional processes compared the strokes and produced a numerical percentage of likeness. The full repository is private for company use, but this is a simple example of the operations completed.

Potential future work is using GANs to create paintings that mimic the originals, then having the algorithm compare the original painting with the GAN-created painting.

Libraries:

Some of the tools required to make this project work:

OpenCV2 - An open source computer vision and machine learning software library.

Scikit-Learn - An open-source machine learning library in Python used for data preprocessing, classification, regression, etc.

Jupyter Notebooks - web-based interactive development environment for notebooks, code, and data.

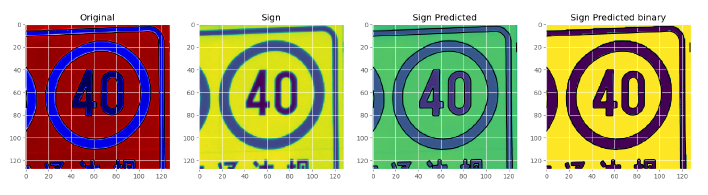

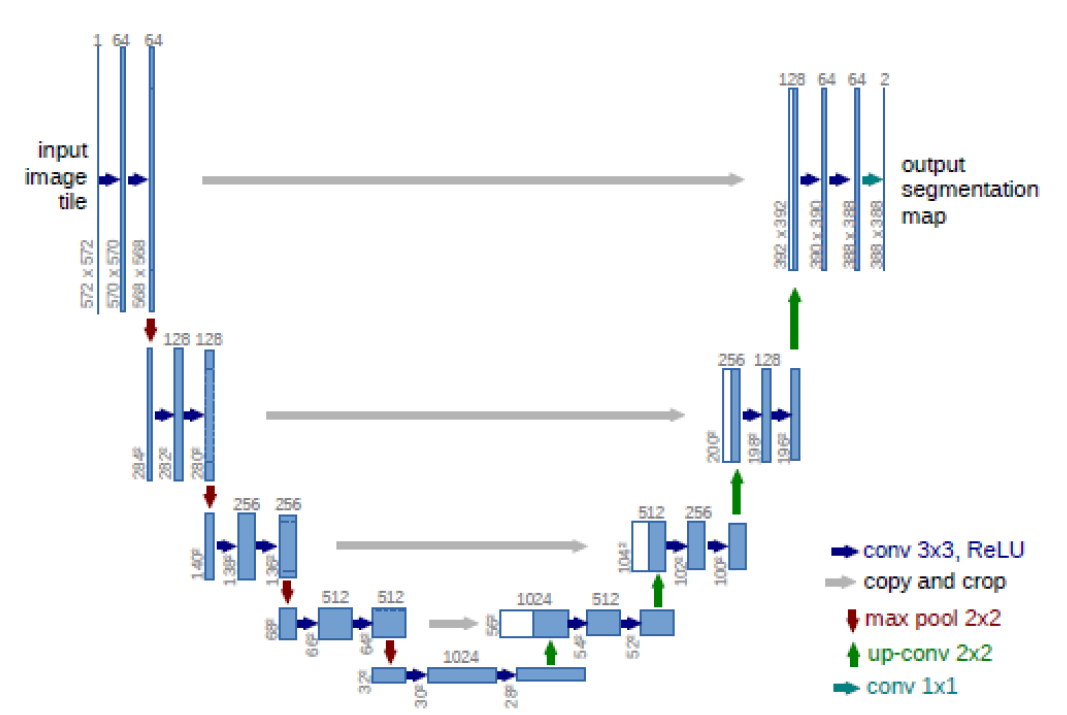

UNet Image Segmentation

Academic research project using the U-Net algorithm to perform image segmentation on traffic sign data

This paper builds on existing knowledge to introduce a novel solution, using an existing algorithm, for detecting traffic signs and estimating their boundaries within an input image. Estimating the boundary of traffic signs within an image and predicting their shape class is solved using a CNN. The proposed method, using a U-Net CNN, is compared against existing CNN methods in terms of computational time and detection accuracy.

The U-Net algorithm, traditionally used for medical imaging, is applied here to image segmentation of traffic sign data. The datasets were gathered from open-source Kaggle datasets, and training was done in a Jupyter notebook.

The model was built and trained using TensorFlow, tweaking the model configuration to maximize performance. I used a Rectified Linear Unit (ReLU) activation between convolution layers, then trained for only 30 epochs, as the model reached maximum accuracy relatively quickly.

More details about the model can be found in the paper.

Libraries:

Some of the tools required to make this project work:

MySQL - MySQL is an open-source relational database management system that uses SQL, a domain-specific language designed for managing data held in a relational database.

TensorFlow - TensorFlow is a free and open-source software library for machine learning and artificial intelligence. It can be used across a range of tasks but has a particular focus on training and inference of deep neural networks.

Jupyter Notebooks - web-based interactive development environment for notebooks, code, and data.

Dog Breed Identification

Prediction and Detection of Different Dog Classes

Image classification has many excellent datasets covering all types of classes, from humans to airplanes to cats. VOC2012, from the PASCAL Visual Object Classes Challenge, provides an image database with 20 classes ranging from people, animals and vehicles to indoor furniture. There is a class for dogs, but not for individual dog breeds, so building on this competition I wanted to train my own model to predict dog breeds.

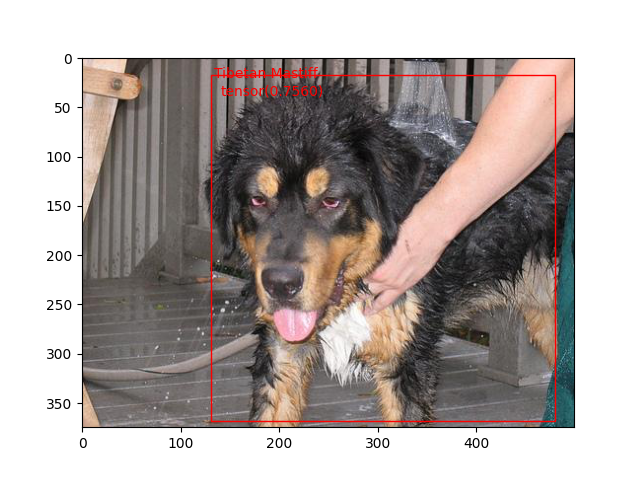

This project builds on [Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks], implemented in PyTorch, to determine the bounding boxes and prediction values for the dog breeds.

Some questions that needed to be answered were:

- How many dog breeds are trained?

- How is the data stored and organized, and how are the features extracted?

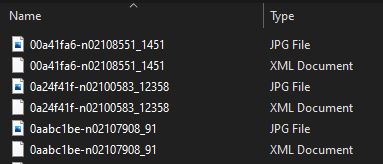

The dataset used was collected from the [Stanford Dogs Dataset], with a total of 120 categories and annotations for class labels and bounding boxes in PASCAL VOC format. This meant the data came as XML and JPG files like the following.

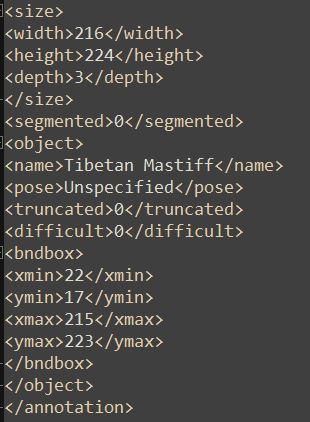

For each image, there is an XML file that defines the size of the image, the class name as well as the bounding box location.
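Reading one of these annotation files needs only the standard library. A sketch assuming the usual PASCAL VOC layout (a `size` element, then one `object` per box with `name` and `bndbox`); the sample XML is a made-up example in that layout, not a file from the dataset.

```python
import xml.etree.ElementTree as ET

# Made-up annotation in PASCAL VOC layout
SAMPLE_XML = """
<annotation>
  <filename>example_dog</filename>
  <size><width>500</width><height>375</height><depth>3</depth></size>
  <object>
    <name>Tibetan_mastiff</name>
    <bndbox><xmin>48</xmin><ymin>30</ymin><xmax>410</xmax><ymax>360</ymax></bndbox>
  </object>
</annotation>
"""


def parse_voc(xml_text):
    """Return (width, height) and a list of (class_name, (xmin, ymin, xmax, ymax))."""
    root = ET.fromstring(xml_text.strip())
    size = root.find("size")
    width, height = int(size.findtext("width")), int(size.findtext("height"))
    boxes = []
    for obj in root.iter("object"):
        bndbox = obj.find("bndbox")
        # Some VOC files store coordinates as floats, so parse via float first
        coords = tuple(int(float(bndbox.findtext(tag))) for tag in ("xmin", "ymin", "xmax", "ymax"))
        boxes.append((obj.findtext("name"), coords))
    return (width, height), boxes


print(parse_voc(SAMPLE_XML))
```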

The data was then run through simple preprocessing augmentations, geometric transformations such as rotating, shifting and mirroring the images, to increase the size of the dataset and give the algorithm more input data.
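Geometric augmentations must move the bounding boxes along with the pixels. A sketch of the mirroring and shifting cases for one box in pixel coordinates `(xmin, ymin, xmax, ymax)`; the function names are hypothetical, and the image itself would be mirrored with e.g. `cv2.flip(image, 1)`.

```python
def _clip(value, upper):
    """Keep a coordinate inside [0, upper]."""
    return max(0, min(value, upper))


def hflip_box(box, image_width):
    """Mirror a box left-to-right; y coordinates are unchanged."""
    xmin, ymin, xmax, ymax = box
    return (image_width - xmax, ymin, image_width - xmin, ymax)


def shift_box(box, dx, dy, image_width, image_height):
    """Translate a box by (dx, dy) pixels and clip it to the image bounds."""
    xmin, ymin, xmax, ymax = box
    return (
        _clip(xmin + dx, image_width),
        _clip(ymin + dy, image_height),
        _clip(xmax + dx, image_width),
        _clip(ymax + dy, image_height),
    )


box = (48, 30, 410, 360)  # placeholder box in a 500 x 375 image
print(hflip_box(box, image_width=500))
print(shift_box(box, dx=100, dy=0, image_width=500, image_height=375))
```

Rotation is the harder case: the four corners have to be rotated and a new axis-aligned box taken around them.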

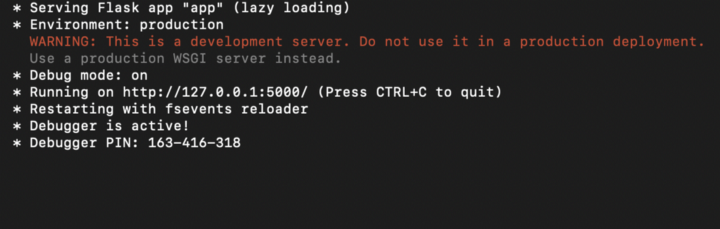

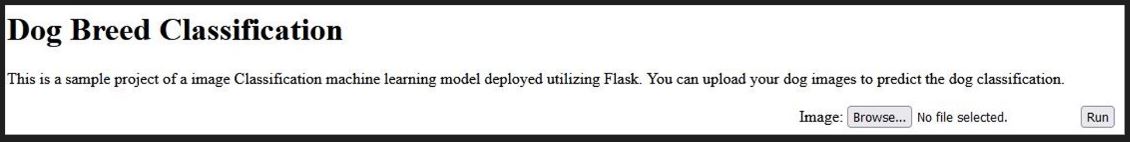

The model is trained on the new dog breed classes and learns to output bounding boxes. It is then served from a localhost web server, and Postman is used to test the API by sending POST requests to the server.

Here we can see the output result: the dog breed, as well as the prediction score in a tensor. The algorithm is 75% confident that this is an image of a Tibetan Mastiff, which is correct. This project was a demonstration of replicating an algorithm from a journal article, as well as of using an API platform, in preparation for building a machine learning API in the future.

Additional work would be to add many more dog classes for training, as well as to improve the model's accuracy so its predictions have higher certainty. In addition, the webpage could look a little better than a blank page with text. The GitHub repository will continue to be updated with these changes.

Libraries:

Some of the tools required to make this project work:

OpenCV2 - An open source computer vision and machine learning software library.

Scikit-Learn - An open-source machine learning library in Python used for data preprocessing, classification, regression, etc.

Postman - Postman is an API platform for building and using APIs. Postman simplifies each step of the API lifecycle and streamlines collaboration.

Jupyter Notebooks - web-based interactive development environment for notebooks, code, and data.

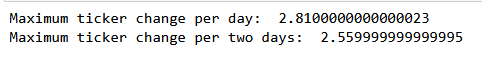

NASDAQ Stock API Tracker

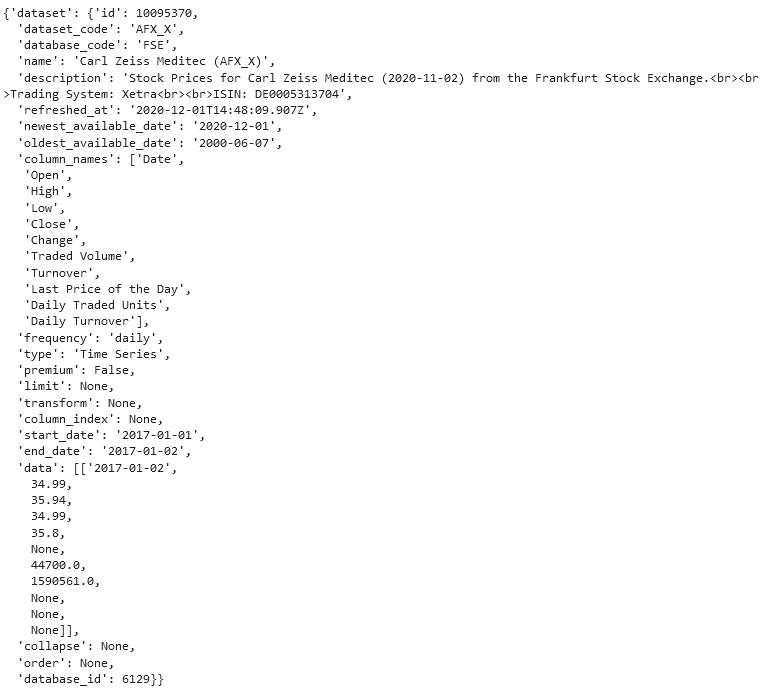

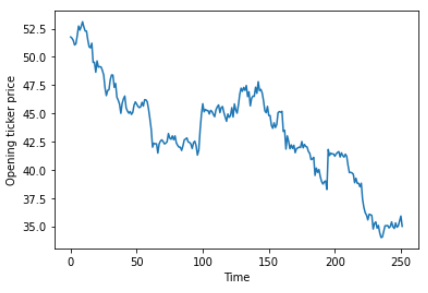

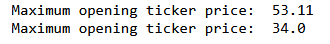

Using the NASDAQ API to collect data on specific stock markets and companies, then performing simple exploratory analysis on the JSON data.

The NASDAQ dataset used here focuses on the Frankfurt Stock Exchange (FSE) and analyzes the stock prices of Carl Zeiss Meditec, which manufactures tools for eye examinations as well as medical lasers for laser eye surgery: https://www.zeiss.com/meditec/int/home.html. The company is listed under the stock ticker AFX_X.

Simple exploratory data analysis was conducted, answering questions like:

What were the highest and lowest opening prices within a certain time period?
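A sketch of answering that question from the returned JSON, assuming a Quandl-style time-series layout (`dataset_data` holding `column_names` and row-wise `data`) with `Date` and `Open` columns. The rows below are placeholders, not real AFX_X prices; rows with a missing (`null`) opening price are skipped. Fetching the live data (endpoint and API key) is left out here.

```python
import json

# Placeholder response in the assumed layout; the prices are made up
RESPONSE_TEXT = """
{"dataset_data": {
  "column_names": ["Date", "Open", "High", "Low", "Close"],
  "data": [
    ["2017-01-04", 100.0, 101.0, 99.5, 100.5],
    ["2017-01-03", 101.5, 102.0, 100.8, 101.2],
    ["2017-01-02", null, 101.9, 100.1, 101.6]
  ]
}}
"""


def open_price_range(response_text):
    """Return ((date, highest open), (date, lowest open)), skipping null opens."""
    dataset = json.loads(response_text)["dataset_data"]
    columns = dataset["column_names"]
    date_idx, open_idx = columns.index("Date"), columns.index("Open")
    opens = [(row[date_idx], row[open_idx]) for row in dataset["data"] if row[open_idx] is not None]
    highest = max(opens, key=lambda pair: pair[1])
    lowest = min(opens, key=lambda pair: pair[1])
    return highest, lowest


highest, lowest = open_price_range(RESPONSE_TEXT)
print("highest open:", highest)
print("lowest open:", lowest)
```

Looking columns up by name rather than by position keeps the code working if the API returns the columns in a different order.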

To be completed…

More details about the data preprocessing can be found in the public GitHub repository: GitHub Project Link

Libraries:

Some of the tools required to make this project work:

Python GET requests - Python Requests GET API Methods

Jupyter Notebooks - web-based interactive development environment for notebooks, code, and data.

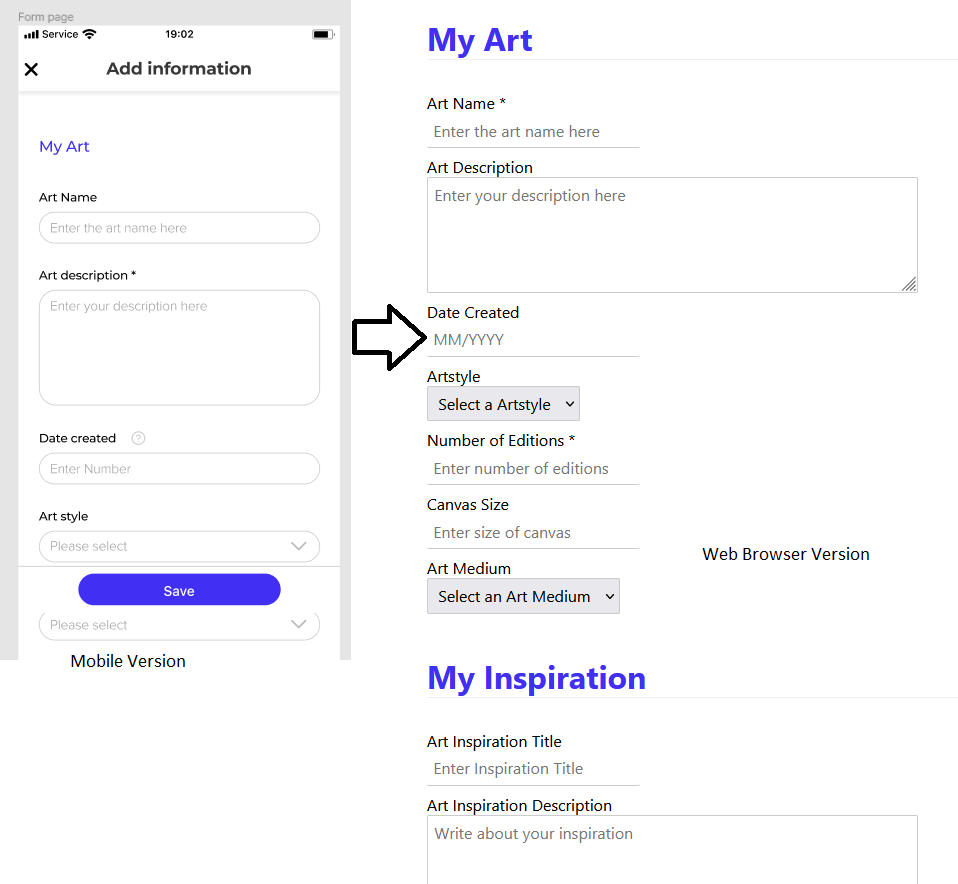

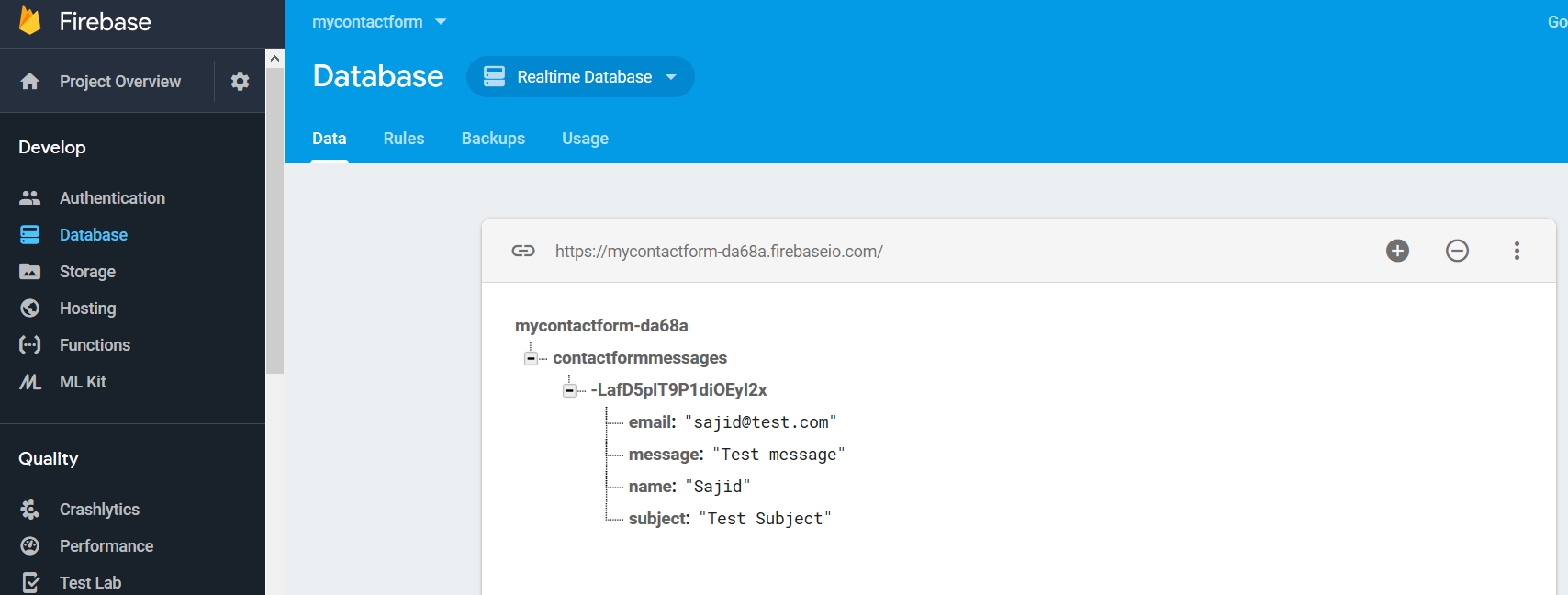

Svelte Web Application

Building a full stack web application from scratch, using HTML/CSS and Google Firebase.

The application uses Svelte, the Google Firebase Realtime Database and Storage, and HTML/CSS with Bootstrap.

Please note that the screenshots shown are limited to protect IP of the company.

Working closely with a graphic designer on the front end, I designed the website based on the graphics and Figma file the designer provided. Multiple iterations of the website's design were required to get it to look as intended.

For the back end, a realtime database populates and dynamically updates the web application, using a unique identifier to look up entries in the database.

The website then takes the data and displays it according to the corresponding unique identifier, updating dynamically and returning a 404 page if the entry is not found in the database.

To be completed…

Libraries:

Some of the tools required to make this project work:

Svelte - Svelte is a free and open-source front-end compiler that turns components written in HTML, CSS and JavaScript into efficient JavaScript.

Bootstrap - Bootstrap is a free and open-source CSS framework directed at responsive, mobile-first front-end web development. It contains HTML, CSS and JavaScript-based design templates for typography, forms, buttons, navigation, and other interface components.

Figma - Figma is a collaborative browser-based interface design tool, with additional offline features enabled by desktop applications for macOS and Windows.

- Acrylic Robotics

- Web Development

My Resume

For most recent resume please send an inquiry email.